OpenAI Chat is no longer just a chatbot—it’s evolving into a full-fledged AI agent that can browse the web, run complex research tasks, and even make purchases. And if you’re a SaaS founder or developer, now’s the time to pay attention.

With the release of Operator and Deep Research, plus support for the Model Context Protocol (MCP), OpenAI has unlocked the next level of intelligent automation. These aren’t incremental upgrades; they’re foundational shifts. You’re no longer limited to conversational responses. You can now build AI agents that plan, act, and execute workflows with far less supervision.

Imagine giving your users a custom-built assistant that finds real-time data, files expenses, and books meetings—on autopilot.

In this post, we’ll unpack exactly how these new capabilities work, what they mean for your software stack, and how to get started today. Whether you’re building a SaaS platform, internal tool, or next-gen assistant, this is your roadmap to the future of AI-powered development.

Table of Contents

- What Are OpenAI Chat Agents?

- Deep Dive into Operator

- Exploring Deep Research

- Understanding the Model Context Protocol (MCP)

- Putting It All Together: Build Your Own OpenAI Chat Agent

- Why It Matters for Custom Software Development

- Challenges & Best Practices

- Conclusion: From Chatbot to Agent — The Next Leap in AI

- FAQs

What Are OpenAI Chat Agents?

OpenAI Chat agents represent a paradigm shift in how we interact with artificial intelligence. Instead of static, prompt-response interactions, OpenAI is building toward agentic AI—models that autonomously plan, reason, and take action on behalf of users.

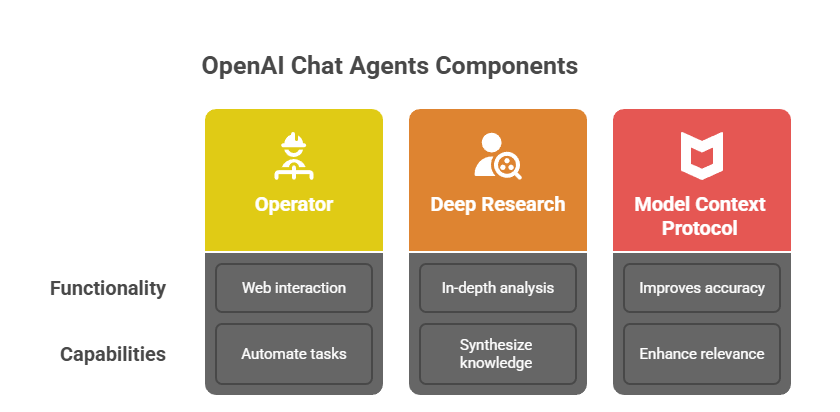

At the heart of this shift are three major components:

- Operator – A tool that enables AI agents to browse the web, click buttons, fill out forms, and even make purchases through a browser the agent controls.

- Deep Research – A feature that allows GPT models to conduct multi-step research, summarize findings, run code, and provide source citations.

- Model Context Protocol (MCP) – An open standard that lets developers connect AI models to external tools and contextual data in a structured, machine-readable way.

These capabilities mark a move toward autonomous, multi-tool AI systems, designed to function like skilled digital employees. They can complete tasks such as filing expense reports, generating market research, or assisting users with onboarding—without constant human input.

For developers, this unlocks a powerful new toolset for building highly personalized, proactive, and intelligent applications—far beyond traditional chatbot functionality.

Deep Dive into Operator

Operator is OpenAI’s boldest step yet toward making GPT models truly useful in the real world. At its core, Operator is a new tool that gives GPT agents the ability to interact with the web in real time through a sandboxed browser that the agent controls.

With Operator, your AI agent can:

- Navigate websites

- Click buttons

- Fill out forms

- Scrape data

- Execute transactions (e.g., booking flights or making purchases)
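
To make the list above concrete, here is a minimal sketch of the kind of step-by-step action loop a browser agent runs. It is not Operator’s actual interface: `Action`, `FakeBrowser`, `scripted_policy`, and the example URL are all hypothetical, and a fixed plan stands in for the model so the code runs offline.

```python
from dataclasses import dataclass, field


@dataclass
class Action:
    kind: str          # "navigate", "click", "fill", or "done"
    target: str = ""   # URL or element label (hypothetical addressing scheme)
    value: str = ""    # text to type when kind == "fill"


@dataclass
class FakeBrowser:
    """In-memory stand-in for a sandboxed browser; records what the agent did."""
    url: str = ""
    fields: dict = field(default_factory=dict)
    clicks: list = field(default_factory=list)

    def apply(self, action: Action) -> None:
        if action.kind == "navigate":
            self.url = action.target
        elif action.kind == "fill":
            self.fields[action.target] = action.value
        elif action.kind == "click":
            self.clicks.append(action.target)
        else:
            raise ValueError(f"unsupported action: {action.kind}")


def scripted_policy(step: int) -> Action:
    """Stand-in for the model: returns a fixed plan instead of reasoning."""
    plan = [
        Action("navigate", "https://expenses.example.com/new"),
        Action("fill", "amount", "42.50"),
        Action("fill", "memo", "Team lunch"),
        Action("click", "Submit"),
    ]
    return plan[step] if step < len(plan) else Action("done")


def run_agent(browser: FakeBrowser, policy, max_steps: int = 10) -> int:
    """Ask the policy for one action at a time until it signals completion."""
    for step in range(max_steps):
        action = policy(step)
        if action.kind == "done":
            return step  # number of actions actually applied
        browser.apply(action)
    raise RuntimeError("step budget exhausted before the task finished")


browser = FakeBrowser()
steps_taken = run_agent(browser, scripted_policy)
```

The `max_steps` budget is a deliberate design choice: an agent that never signals completion fails loudly instead of looping forever.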

Real-World Use Cases

- Expense filing: Upload receipts, autofill company forms, and submit reports.

- Online shopping: Compare prices, select delivery options, and place orders.

- Customer support automation: Navigate internal knowledge bases to provide real-time help.

This turns the chatbot into something more like a personalized assistant or junior employee, capable of completing end-to-end workflows.

OpenAI has started rolling out Operator (see OpenAI: Introducing Operator) to Pro and Team users via the ChatGPT interface. Developers can expect broader access through the Responses API, allowing you to embed these capabilities into your own products (see WSJ: OpenAI’s Operator Agent in Action).

Operator runs in a sandboxed environment and is designed with user approval flows and tool permissioning, offering a balance between power and safety.

Exploring Deep Research

If Operator gives GPT a browser, Deep Research gives it a brain for critical thinking.

Deep Research is a new capability that lets GPT agents autonomously research topics, cross-reference sources, run code, and return reliable, cited results—all within a single multi-step workflow. It’s like having a junior analyst or researcher built into your product.

What It Can Do

- Analyze across sources: Pull data from multiple sites and summarize consensus

- Write and run code: Perform statistical analysis or visualizations

- Generate citations: Link to original sources, even in markdown or academic format

For developers, this means you can now build AI tools that synthesize knowledge, not just regurgitate it.

Example:

A SaaS platform for startup founders could use Deep Research to:

- Analyze competitors

- Compare funding data from Crunchbase, TechCrunch, and PitchBook

- Create a weekly digest—fully automated and sourced

Deep Research is currently available via OpenAI’s Responses API. The best part? You can chain these tasks together, enabling true autonomous research agents with guardrails in place.
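
As a rough illustration of the “cross-reference and cite” step, the sketch below groups claims by the sources that support them and renders a markdown digest with numbered citations. The findings, URLs, and function names are made up for the example; a real pipeline would get them from research tool calls rather than a hard-coded list.

```python
from collections import defaultdict

# Hypothetical findings a research step might return: (claim, source_url).
FINDINGS = [
    ("Acme raised a Series B", "https://news.example.com/acme-series-b"),
    ("Acme raised a Series B", "https://data.example.org/acme"),
    ("Acme launched in 2021", "https://data.example.org/acme"),
]


def group_by_claim(findings):
    """Map each claim to the de-duplicated list of sources supporting it."""
    grouped = defaultdict(list)
    for claim, url in findings:
        if url not in grouped[claim]:
            grouped[claim].append(url)
    return dict(grouped)


def render_digest(grouped):
    """Render markdown bullets with numbered citations plus a source list."""
    numbering = {}  # url -> citation number, assigned in first-seen order
    lines = []
    for claim, urls in grouped.items():
        refs = []
        for url in urls:
            numbering.setdefault(url, len(numbering) + 1)
            refs.append(f"[{numbering[url]}]")
        lines.append(f"- {claim} {''.join(refs)}")
    lines.append("")
    lines.extend(f"[{n}] {url}" for url, n in numbering.items())
    return "\n".join(lines)


digest = render_digest(group_by_claim(FINDINGS))
print(digest)
```

Claims backed by more than one source carry multiple citation markers, which gives readers a quick signal of how well supported each statement is.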

Understanding the Model Context Protocol (MCP)

One of the biggest breakthroughs enabling agentic AI is the Model Context Protocol (MCP)—and while it sounds technical, its goal is simple: standardize how AI models receive and understand external context.

Think of MCP as a shared language between your software and the AI agent.

What Is MCP?

MCP is an open-source protocol introduced by Anthropic and since adopted by OpenAI and other major players. It allows developers to expose structured “contexts” to models, such as user data, documents, or tools, so they can process and respond with better accuracy and relevance.

Why It Matters

Before MCP, developers typically had to stuff everything into a long prompt or write a one-off integration for each data source. With MCP, you can:

- Feed structured context directly to the model

- Dynamically link content from internal tools or CRMs

- Update what the model sees in real time

This is especially powerful when building tools like:

- Custom onboarding assistants

- AI customer success agents

- Data-aware chatbots that reference live metrics

OpenAI has already implemented MCP in its Responses API, giving developers a cleaner, more scalable way to control model behavior—without prompt engineering hacks.
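
Under the hood, MCP messages are JSON-RPC 2.0. The sketch below shows roughly what a `tools/list` and `tools/call` exchange looks like, using a tiny in-process handler instead of a real server or transport. The tool name `get_account_metrics`, its schema, and the returned numbers are hypothetical.

```python
import json

# Hypothetical tool registry; a real MCP server would expose live data.
TOOLS = {
    "get_account_metrics": {
        "description": "Return usage metrics for one customer account.",
        "inputSchema": {
            "type": "object",
            "properties": {"account_id": {"type": "string"}},
            "required": ["account_id"],
        },
        # Stand-in handler with made-up numbers.
        "handler": lambda args: {"account_id": args["account_id"], "active_users": 128},
    }
}


def handle(request: dict) -> dict:
    """Answer a JSON-RPC 2.0 request for the two tool methods sketched here."""
    method = request["method"]
    params = request.get("params", {})
    if method == "tools/list":
        result = {"tools": [
            {"name": name,
             "description": tool["description"],
             "inputSchema": tool["inputSchema"]}
            for name, tool in TOOLS.items()
        ]}
    elif method == "tools/call":
        tool = TOOLS[params["name"]]
        payload = tool["handler"](params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": json.dumps(payload)}]}
    else:
        # -32601 is the standard JSON-RPC "Method not found" error code.
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


call = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "get_account_metrics",
               "arguments": {"account_id": "acct_42"}},
}
response = handle(call)
print(json.dumps(response, indent=2))
```

Because the model only sees the tool’s name, description, and input schema, the server decides what data actually leaves your systems on each call.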

Putting It All Together: Build Your Own OpenAI Chat Agent

With Operator, Deep Research, and MCP in your toolkit, you’re now able to build your own OpenAI Chat agent—an autonomous, browser-enabled assistant that thinks critically and acts with context.

Here’s What You Need

- Responses API – The backbone that powers interaction with GPT-4o and custom tools

- MCP Server – Hosts the structured context your agent will use

- OpenAI Agents SDK – Lets you define your agent’s capabilities and behavior

- Cookbook Examples – Start from OpenAI’s prebuilt templates and extend

Sample Use Case: Travel Assistant Agent

- Define tools – Search flights, scrape weather, book hotels

- Set up MCP – Feed it user preferences, budget, frequent flyer data

- Chain Deep Research – Compare travel options from multiple sources

- Use Operator – Navigate to booking sites and finalize the purchase
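
The four steps above can be sketched as a small pipeline. Everything here is a stand-in: the airline names, prices, and sources are invented, `search_flights` returns canned data instead of calling real tools, and `book` only returns a status string rather than driving a browser.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Preferences:
    """Step 2: the structured context the agent is allowed to see."""
    budget_usd: float
    preferred_airline: str


@dataclass
class Option:
    airline: str
    price_usd: float
    source: str


def search_flights(destination: str) -> list:
    """Steps 1 and 3: stand-in for tool calls that query several sources."""
    return [
        Option("Northwind Air", 420.0, "source-a.example"),
        Option("Contoso Airways", 380.0, "source-b.example"),
        Option("Northwind Air", 510.0, "source-b.example"),
    ]


def choose(options: list, prefs: Preferences) -> Optional[Option]:
    """Stay within budget, prefer the user's airline, then take the lowest price."""
    affordable = [o for o in options if o.price_usd <= prefs.budget_usd]
    if not affordable:
        return None
    return min(
        affordable,
        key=lambda o: (o.airline != prefs.preferred_airline, o.price_usd),
    )


def book(option: Option, confirmed: bool) -> str:
    """Step 4: only 'purchase' after explicit user confirmation."""
    if not confirmed:
        return "pending-approval"
    return f"booked:{option.airline}:{option.price_usd:.2f}"


prefs = Preferences(budget_usd=450.0, preferred_airline="Northwind Air")
choice = choose(search_flights("LIS"), prefs)
status = book(choice, confirmed=True)
print(status)
```

Keeping the purchase behind a `confirmed` flag means the agent can do all the comparison work on its own while the user still makes the final call.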

In fewer than 100 lines of code, developers have already built agents that:

- Create blog posts from scratch

- Monitor legal documents and highlight risks

- Conduct market analysis with citations

Check out OpenAI’s Cookbook and GitHub agent templates for working examples.

Why It Matters for Custom Software Development

For software development teams, especially those building SaaS platforms, OpenAI Chat agents offer a massive leap in product capability, automation, and user experience.

Here’s Why This Changes the Game:

1. Smarter Features, Less Code

Instead of building complex logic flows or microservices, you can now delegate entire tasks to an AI agent that:

- Reads your data

- Understands user preferences

- Acts autonomously

This drastically reduces development time while increasing feature richness.

2. True User Personalization

MCP allows agents to personalize outputs based on live context—such as CRM data, purchase history, or behavioral patterns. The result? AI that behaves more like a real assistant than a chatbot.

3. Cost Efficiency at Scale

AI agents can handle support tickets, onboarding, research, and QA tasks—without the cost of hiring or training new staff.

4. Faster Time to Market

With tools like the Agents SDK and OpenAI’s modular APIs, teams can prototype, test, and deploy new AI features in days instead of months.

For Skywinds clients, these tools unlock the power to go from idea to agent faster than ever, transforming both backend logic and user-facing experiences.

Challenges & Best Practices

While OpenAI Chat agents are powerful, they’re not plug-and-play magic. Developers must consider security, reliability, and user trust when embedding these agents into real-world applications.

Key Challenges:

1. Prompt Injection & Tool Misuse

If an agent is not sandboxed properly, attackers can hijack its behavior by injecting deceptive instructions, either directly in a prompt or through web pages and documents the agent reads. This risk grows when agents interact with tools like browsers or payment systems.

2. Data Leakage

Without proper permissions and data filtering, agents could inadvertently expose private user data, internal links, or credentials.

3. Unpredictable Behavior

Autonomous agents may take unintended actions—especially if tools are chained across multiple steps.
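
One simple mitigation for the data leakage risk is to redact obvious secrets before any text reaches the model. The sketch below is deliberately basic, and its two patterns are illustrative assumptions rather than a complete filter; production systems usually need a proper data-loss-prevention layer.

```python
import re

# Illustrative patterns only; real deployments need broader coverage.
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "secret": re.compile(r"\b(?:sk|key|token)[-_][A-Za-z0-9]{8,}\b"),
}


def redact(text: str) -> str:
    """Replace every match of each pattern with a labeled placeholder."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[REDACTED_{label.upper()}]", text)
    return text


sample = "Contact jane@example.com, API key sk-abc12345XYZ."
clean = redact(sample)
print(clean)
```

Running the filter at the boundary where context is assembled keeps the rule in one place, instead of relying on every tool author to remember it.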

Best Practices:

- Tool Permissions: Clearly define what each tool (like Operator) can do, and log all actions.

- User Approval Flows: Require manual confirmation before agents take sensitive actions like purchases or deletions.

- Context Scoping with MCP: Limit what the model can see to only what’s relevant—don’t overload it.

- Testing with Sandboxes: Run agents in dev environments with fake data before production rollout.

- Regular Audits: Review prompts, context feeds, and agent behavior logs regularly.
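
The first two practices, tool permissions and user approval flows, can be combined into a single wrapper around every tool call. This is a minimal sketch under assumed names: the `POLICY` table, `guarded_call`, and the example tools are hypothetical and not part of any OpenAI SDK.

```python
import logging

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("agent.audit")

# Hypothetical policy: which tools exist and which need a human in the loop.
POLICY = {
    "search_web": {"allowed": True, "needs_approval": False},
    "make_purchase": {"allowed": True, "needs_approval": True},
    "delete_record": {"allowed": False, "needs_approval": True},
}


class ToolDenied(Exception):
    """Raised when a tool call is blocked by policy or rejected by the user."""


def guarded_call(tool_name, tool_fn, args, approve):
    """Check policy, ask for approval if required, log, then run the tool."""
    rule = POLICY.get(tool_name)
    if rule is None or not rule["allowed"]:
        log.warning("denied tool=%s args=%s", tool_name, args)
        raise ToolDenied(tool_name)
    if rule["needs_approval"] and not approve(tool_name, args):
        log.info("rejected by user tool=%s args=%s", tool_name, args)
        raise ToolDenied(tool_name)
    log.info("calling tool=%s args=%s", tool_name, args)
    return tool_fn(**args)


def always_yes(tool_name, args):
    """Stand-in for a real confirmation prompt shown to the user."""
    return True


result = guarded_call(
    "make_purchase",
    lambda item, price: f"ordered {item} for ${price}",
    {"item": "monitor", "price": 199},
    approve=always_yes,
)
print(result)
```

Unknown tools are denied by default, so adding a new capability requires an explicit policy entry rather than an accidental opt-in.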

By building safely, you gain trust—and trust is what makes AI truly valuable to your end users.

Conclusion: From Chatbot to Agent — The Next Leap in AI

The shift from static chat to autonomous, multi-tool agents is more than just a technical upgrade. It’s a transformation in how software gets built, how users interact with AI, and how companies scale without scaling headcount.

With Operator, you give AI the power to act.

With Deep Research, you give it the power to think.

With MCP, you give it the power to understand context.

Together, these tools turn GPT into a far more capable system: one that can solve problems, carry out tasks, and work from up-to-date context.

For software teams, this is your moment. Whether you’re building customer support tools, onboarding flows, analytics dashboards, or internal automations—OpenAI Chat agents can become the backbone of smarter, faster, more human-like software.

Want to build your own ChatGPT-powered agent?

Skywinds can help you architect and deploy custom OpenAI agents using Operator, Deep Research, and MCP—securely and at scale.

Let’s bring your AI assistant to life. Contact us at skywinds.tech

FAQs

1. What is an OpenAI Chat agent?

An OpenAI Chat agent is an autonomous AI system that can browse websites, analyze data, and complete tasks using tools like Operator, Deep Research, and MCP.

2. How do I build a custom AI assistant with OpenAI tools?

You can build a custom AI assistant using OpenAI’s Responses API, Agents SDK, and Model Context Protocol to define behavior, context, and tools.

3. What is the OpenAI Operator and what does it do?

Operator is a tool that allows GPT to interact with web pages (click, fill forms, scrape data, and even make purchases) through a browser the agent controls.

4. Is MCP necessary to build an OpenAI Chat agent?

Not strictly. You can build an agent without it, but MCP helps AI agents understand structured context like user data, making them more accurate and controllable for developers.

5. Can OpenAI Chat agents be used in SaaS products?

Absolutely. They’re ideal for automating onboarding, support, research, and personalized workflows in SaaS platforms and internal tools.